The War Quants Counter-UAS Primer: Sensing

Part 2 of Breaking the UAS Kill Chain

Bottom Line Up Front:

1. Sensor performance varies dramatically with environment, latency, and target profile. No sensor works perfectly on its own; performance modeling helps balance cost, coverage, and constraints.

2. Layered sensor networks with multiple sensor types can overcome environmental and target profile limitations and are crucial for detecting and countering small UAS threats. Radar, RF, EO/IR, and acoustic systems each bring strengths, but integrated fusion delivers superior operational awareness.

3. Commanders need simulation-based planning tools for CUAS defense to understand these trade-offs and sensor requirements.

The Sensor Layer: Seeing the Swarm Before It Strikes

The first step in defeating a drone threat is detecting it. However, small UAS (sUAS) are often quiet, slow, and low-flying, which makes detecting them a problem that traditional air defense systems were not designed to handle. Environmental clutter, such as bird flights, can confuse sensors and lead to false positive detections. Modern counter-UAS systems require a layered sensor architecture that combines multiple modalities, including RF, radar, acoustic, and EO/IR, with data fusion to integrate them. Modeling and simulating these systems-of-systems further helps users optimize their employment. This article is part 2 of the War Quants CUAS Primer and dives into modeling CUAS sensors on modern battlefields.

The Detect, Track, Identify, and Defeat (DTID) Cycle

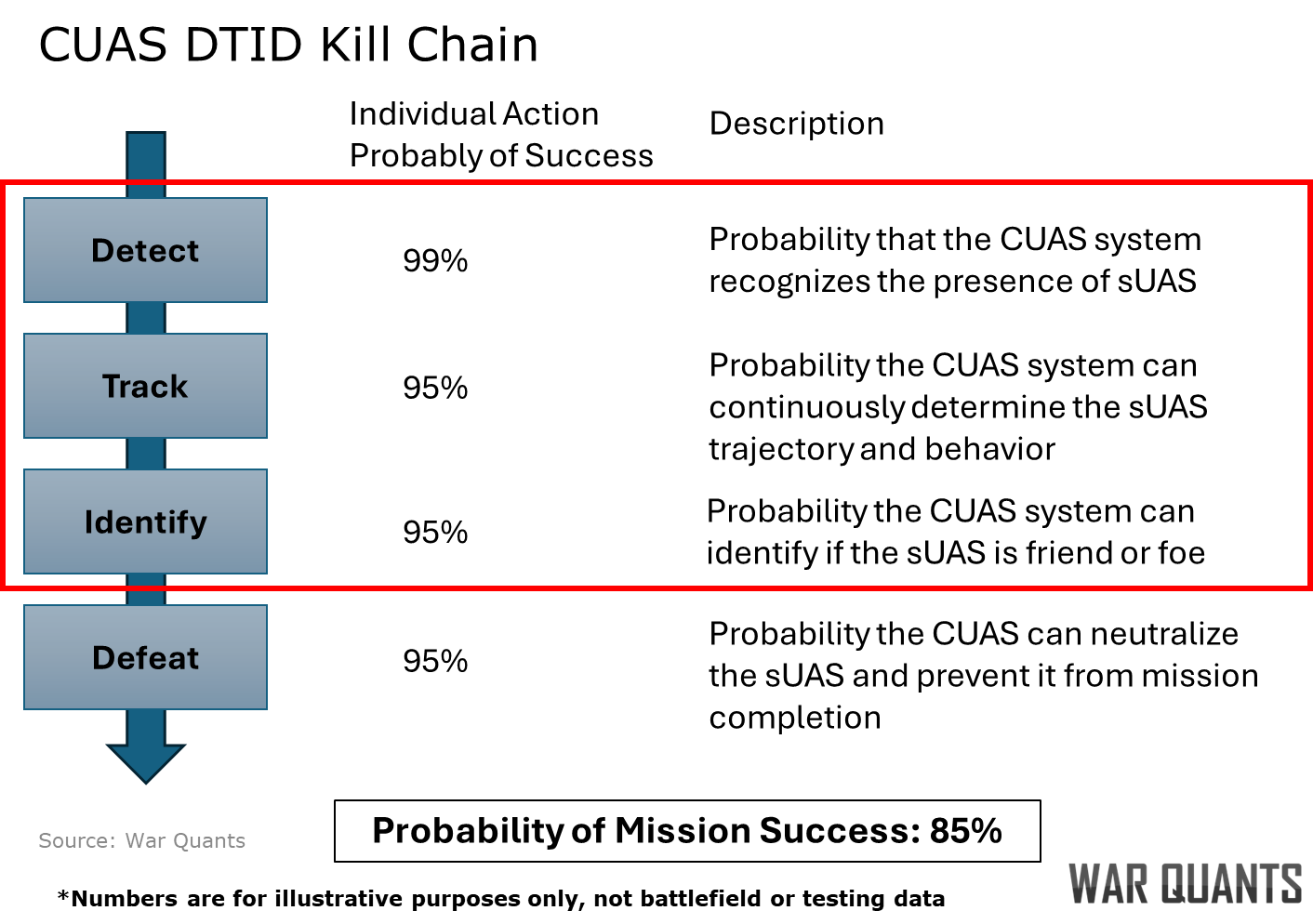

The CUAS kill chain can be simplified into four basic steps: detect, track, identify, and defeat. These four steps are outlined in Marine Corps CUAS documents and align closely with the F2T2EA targeting cycle covered in the introduction to this primer. The cycle, summarized below, requires sensors and coordinated data fusion between them to detect, track, and identify sUAS threats.

The DTID process can serve as a basic model for predicting the probability of success in a CUAS engagement. To achieve a successful engagement, a system must first detect the target, establish a track, identify it, and then conduct a successful engagement. Modeling all of these as independent events, each with its own probability of success, allows analysts to derive the overall probability of success, as shown in the figure below.

In the case shown, the CUAS system has a 99% probability of detecting, a 95% chance of tracking, and a 95% chance of identifying a sUAS. When coupled with a 95% defeat probability, the overall chance of defeating a sUAS is about 85% (0.99 × 0.95 × 0.95 × 0.95 ≈ 0.85). This probability may seem high; however, it corresponds to defeating only about six sUAS on average before one gets through. Future CUAS systems will require significantly higher success rates.
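The arithmetic behind this example can be sketched in a few lines of Python. This is a minimal illustration that assumes, as the text does, that the four stages are independent; the variable names are chosen here for illustration and do not come from any War Quants tool.

```python
from math import prod

# Stage probabilities taken from the example in the text.
stages = {"detect": 0.99, "track": 0.95, "identify": 0.95, "defeat": 0.95}

# With independent stages, the engagement succeeds only if every stage succeeds.
p_success = prod(stages.values())
p_leak = 1.0 - p_success

# Geometric distribution: mean number of sUAS defeated before the first leaker,
# and mean number of engagements up to and including the first leaker.
mean_defeated_before_leak = p_success / p_leak
mean_engagements_to_first_leak = 1.0 / p_leak

print(f"P(success) = {p_success:.3f}")
print(f"P(leak)    = {p_leak:.3f}")
print(f"Mean defeats before first leaker   = {mean_defeated_before_leak:.1f}")
print(f"Mean engagements until first leaker = {mean_engagements_to_first_leak:.1f}")
```

Under these assumptions the chain yields roughly 5.6 defeats before the first leaker, or about 6.6 engagements up to and including it, which brackets the rough figure of six quoted above. Raising any single stage probability shows how quickly the weakest stage dominates the result.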

Types of CUAS Sensors

CUAS sensors fall into one of two major categories: passive sensors, which do not emit energy, and active sensors, which emit energy. Under these two broad categories, a variety of sensor options are available.

Passive Sensors:

Visual observation remains a foundational element in CUAS operations, particularly in environments where electronic sensors are degraded or unavailable. As emphasized in the U.S. Army’s ATP 3-01.81 Counter-Unmanned Aircraft System Techniques, trained air guards serve as frontline detectors, providing critical early warning and visual confirmation of aerial threats. These observers, equipped with binoculars or spotting scopes, often work in coordination with RF and acoustic detection systems, cueing their optics toward probable threat axes for rapid identification. Human observers are especially valuable in complex terrain such as urban environments or dense vegetation, where electronic line-of-sight is obstructed and drones may fly below radar coverage. Visual observation also offers a zero-emission profile, which is critical in low-signature operations, and enables high-confidence identification of drone type, payload, and intent. However, the effectiveness of visual observation is constrained by daylight, weather conditions, and the cognitive and physical demands placed on personnel. Despite these limitations, visual observation remains indispensable, particularly for small-unit defense and forward-deployed formations that lack advanced sensor suites.

Acoustic detection systems offer a passive, cost-effective method for identifying and tracking drones by capturing their unique propeller and motor sound signatures. These systems use microphone arrays to capture the signatures, which machine learning algorithms then analyze to classify drone types, for example by distinguishing one sUAS model from another. Triangulation techniques, based on the time-difference-of-arrival (TDOA) from multiple microphones, enable the calculation of a drone’s bearing and range. While acoustic detection is particularly effective in quiet, rural environments, its performance can be degraded in noisy or windy conditions.
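To make the TDOA idea concrete, the sketch below estimates a bearing from a single pair of microphones. It is a simplified far-field illustration, not a description of any fielded system: it assumes the drone is far enough away that the sound arrives as a plane wave, and the function name, the speed-of-sound constant, and the sign convention are all assumptions made here.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumption: dry air at roughly 20 degrees C


def bearing_from_tdoa(delta_t_s: float, mic_spacing_m: float) -> float:
    """Far-field angle of arrival in degrees, measured from the array broadside.

    delta_t_s is (arrival time at microphone B) minus (arrival time at
    microphone A). A positive value means the sound reached A first, so the
    source lies on A's side of the broadside direction.
    """
    if mic_spacing_m <= 0:
        raise ValueError("mic_spacing_m must be positive")
    # Extra path length to the later microphone, as a fraction of the spacing.
    ratio = SPEED_OF_SOUND_M_S * delta_t_s / mic_spacing_m
    # Timing noise can push the ratio slightly outside [-1, 1]; clamp it.
    ratio = max(-1.0, min(1.0, ratio))
    return math.degrees(math.asin(ratio))


# Hypothetical measurement: microphones 1 m apart, 1.46 ms delay.
print(f"{bearing_from_tdoa(0.00146, 1.0):.1f} degrees off broadside")
```

A single pair only gives a bearing, and an ambiguous one (front versus back). Estimating range, as the paragraph above describes, needs additional microphones or several separated arrays whose bearings can be intersected.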

A notable example of acoustic detection in practice is Ukraine’s deployment of a nationwide network of nearly 10,000 acoustic sensors, known as “Sky Fortress.” Developed by two Ukrainian engineers, this system uses inexpensive sensors—each costing between $400 and $500—to detect the acoustic signatures of incoming drones, such as the Iranian-made Shahed-136. The data collected is processed centrally and disseminated to mobile fire teams equipped with anti-aircraft weapons, enabling them to intercept drones effectively. This low-cost, scalable solution has garnered interest from the U.S. military and NATO allies as a model for affordable air defense strategies.

Electro-Optical/Infrared (EO/IR) systems are line-of-sight sensor modalities that enable visual confirmation, battle damage assessment, and target handoff in a CUAS system. EO systems, typically cued by RF or radar sensors, provide real-time daylight tracking by detecting motion and shape contrast against background terrain. Infrared sensors enhance this capability by detecting the heat signatures of drone motors and onboard electronics, particularly during early morning or nighttime operations. Modern multi-spectral sensor balls mounted on vehicles, towers, and UAS platforms offer stabilized gimbals with automated tracking, often integrating both EO and IR feeds to increase detection probability. Often, systems integrate machine or computer vision into EO/IR video feeds to automate target detection and identification. Weather, obscuration, and poor lighting conditions can challenge detection, while edge computer vision requires significant power and computer processing resources.

Radio Frequency (RF) detection is a cornerstone of modern counter-UAS (CUAS) systems, offering a passive means of identifying threats without emitting. Passive RF detectors monitor common control and telemetry frequencies, such as 2.4 GHz and 5.8 GHz, to detect command-and-control (C2) links and first-person view (FPV) video feeds, an approach that is especially effective against manually piloted drones. Advanced systems use protocol-aware decoders that can extract metadata, such as drone IDs and model types, even from encrypted links, leveraging proprietary signature libraries and radio frequency machine learning (RFML). RF triangulation networks geolocate both drones and their operators by correlating direction-of-arrival data from multiple nodes, which is critical for identifying swarm launch points or C2 hubs. Additionally, spectrum analysis tools can isolate anomalous RF activity in congested electromagnetic environments to flag potential threats. While RF-based systems excel in long-range early warning, they are less effective against autonomous or tethered drones that emit minimal or no RF signals. For example, the Ukrainian and Russian militaries both utilize fiber-optic-controlled, one-way attack drones that cannot be detected with passive RF.
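The direction-of-arrival triangulation described above reduces, in its simplest two-node form, to intersecting two bearing lines. The sketch below shows that geometry on a flat east/north grid. The function name and coordinate convention are assumptions for illustration; real systems would also weight bearings by their error and use more than two nodes.

```python
import math


def intersect_bearings(p1, brg1_deg, p2, brg2_deg):
    """Return the (east, north) point where two bearing lines cross.

    p1 and p2 are sensor positions as (east_m, north_m). Bearings are degrees
    clockwise from north. Returns None when the bearings are near-parallel and
    no usable fix exists. Lines are treated as infinite, so a caller should
    still check that the fix lies in front of both sensors.
    """
    d1 = (math.sin(math.radians(brg1_deg)), math.cos(math.radians(brg1_deg)))
    d2 = (math.sin(math.radians(brg2_deg)), math.cos(math.radians(brg2_deg)))
    denom = d1[0] * d2[1] - d1[1] * d2[0]  # 2-D cross product d1 x d2
    if abs(denom) < 1e-9:
        return None
    dx, dy = p2[0] - p1[0], p2[1] - p1[1]
    t = (dx * d2[1] - dy * d2[0]) / denom  # distance along the first bearing
    return (p1[0] + t * d1[0], p1[1] + t * d1[1])


# Hypothetical nodes 1 km apart, each reporting a bearing to the same emitter.
fix = intersect_bearings((0.0, 0.0), 45.0, (1000.0, 0.0), 315.0)
print(fix)
```

With the hypothetical inputs shown, the two bearings cross 500 m east and 500 m north of the first node. The same routine applies whether the emitter is the drone or its operator, since both show up as bearings to an RF source.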

Active Sensors:

Radar systems provide an essential layer of CUAS capability by detecting airborne threats in all-weather, day-and-night conditions. As noted in Principles of Naval Weapon Systems, radar excels at identifying isolated targets against low-clutter backgrounds. Modern short-range active radars—operating in Ku-, X-, and Ka-bands—can be specifically designed to detect and track small, low radar cross-section (RCS) drones. These systems often incorporate low-Doppler filters to track slow-moving or hovering sUAS, a common signature of Group 1–2 threats. Passive radar, meanwhile, utilizes ambient signals, such as FM radio, television broadcasts, or LTE towers, to detect reflections, enabling stealthy detection in electronically contested environments without emitting its own signals. Despite these advantages, radar performance can degrade in low-altitude cluttered environments (e.g., urban terrain) and is inherently constrained by the small RCS of many drones.

Software & Signal Fusion

Software and signal fusion form the backbone of scalable, adaptable CUAS networks by enabling enhanced coordination across diverse sensor modalities. Fusion engines integrate inputs from radar, RF, EO/IR, and acoustic sources to produce a unified threat picture, improving detection accuracy and reducing latency through automated cross-cueing. These systems prioritize and track threats dynamically, assigning confidence scores and optimizing sensor tasking in real-time. Advanced AI/ML models further enrich the process by classifying drone roles, such as ISR versus attack, based on behavioral patterns, emissions, and trajectory, while also flagging anomalies like swarm pre-launch signatures. This multilayered integration enhances tactical agility and decision speed across echelons, making fusion software a force multiplier in complex environments. However, these benefits come with significant demands: multisensor fusion systems require extensive integration, substantial bandwidth for real-time data transmission, and high-performance edge computing to process inputs without introducing operational lag. Despite these challenges, fusion remains essential for modern C-UAS operations, particularly as threat volumes and complexity scale beyond what any single sensor can manage.
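One simple way to picture how a fusion engine might assign a confidence score is a naive Bayes update in log-odds form. The sketch below is an illustrative assumption, not the method of any particular fusion product: it treats sensor reports as conditionally independent, and the prior and likelihood values are hypothetical placeholders rather than measured sensor performance.

```python
import math


def fuse_confidence(prior: float, reports: list[tuple[float, float]]) -> float:
    """Posterior probability that a track is a drone after several reports.

    Each report is a pair:
        (P(sensor reports a hit | drone), P(sensor reports a hit | clutter))
    Reports are assumed conditionally independent (a naive Bayes assumption).
    """
    log_odds = math.log(prior / (1.0 - prior))
    for p_hit_given_drone, p_hit_given_clutter in reports:
        # Each report shifts the log-odds by its log likelihood ratio.
        log_odds += math.log(p_hit_given_drone / p_hit_given_clutter)
    return 1.0 / (1.0 + math.exp(-log_odds))


# Hypothetical values: a weak prior, then RF, radar, and EO/IR all report a hit.
prior = 0.10
reports = [(0.90, 0.20), (0.80, 0.30), (0.70, 0.10)]
print(f"Fused confidence: {fuse_confidence(prior, reports):.2f}")
```

The point of the sketch is the shape of the behavior: several individually unconvincing reports can combine into a high-confidence track, which is also why correlated false alarms (for example, birds seen by both radar and EO) violate the independence assumption and need separate handling.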

Modeling Detection, Tracking, and Identification

Modeling detection, tracking, and identification in a counter-UAS architecture underscores a key operational reality: no single sensor provides comprehensive coverage against the full range of sUAS threats. The simple DTID model presented earlier can benefit from additional rigor in capturing the complexities of detection, tracking, and identification.

Each modality—RF, radar, EO/IR, acoustic—brings distinct advantages but also inherent limitations. Mission resilience requires a distributed sensor network that can absorb environmental noise, scale with the volume of threats, and adapt to the diversity of drones being fielded. Integration requires both building networks of sensors and managing tradeoffs:

Latency: The time from initial detection to engagement cueing is measured in seconds, and at short ranges, in milliseconds; delay directly impacts the probability of defeat.

Signature Tradeoff: Active emitters, such as radar or laser rangefinders, risk exposing friendly positions to adversary electronic warfare systems that can cue enemy fire assets for strikes.

Environmental Limits: Weather, terrain, and background noise can degrade acoustic and optical performance, especially at low altitudes.

Coverage and Cost: No single sensor field can provide 360-degree coverage across varied ranges without creating budgetary and logistical burdens.

No CUAS sensor network is perfect, but networks become far more effective when built around principles of redundancy, low-latency cueing, and intelligent data fusion. The faster a threat is detected, classified, and handed off, the more likely a kinetic or non-kinetic response will disrupt the threat's kill chain before it can be completed. In a high-tempo drone environment, timing is decisive.
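The latency tradeoff can be made concrete with a simple time budget. The sketch below is a back-of-the-envelope illustration that assumes a drone flying straight at the defended point at constant speed; the function name and all of the numbers are hypothetical.

```python
def engagement_window_s(detect_range_m: float, keep_out_range_m: float,
                        speed_m_s: float, latency_s: float) -> float:
    """Seconds left to engage once sensing-to-cueing latency has been spent.

    Assumes a constant-speed, straight-line approach. A negative result means
    the drone reaches the keep-out range before the effector is even cued.
    """
    if speed_m_s <= 0:
        raise ValueError("speed_m_s must be positive")
    time_to_keep_out_s = (detect_range_m - keep_out_range_m) / speed_m_s
    return time_to_keep_out_s - latency_s


# Hypothetical case: detection at 2 km, 200 m keep-out, 30 m/s drone.
for latency in (1.0, 5.0, 20.0):
    window = engagement_window_s(2000.0, 200.0, 30.0, latency)
    print(f"latency {latency:>4.1f} s -> {window:.1f} s to engage")
```

Shrinking the detection range to a few hundred meters in the same sketch shows why latency that is tolerable at long range becomes decisive at short range.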

Evaluating CUAS Sensor Architectures Through Simulation

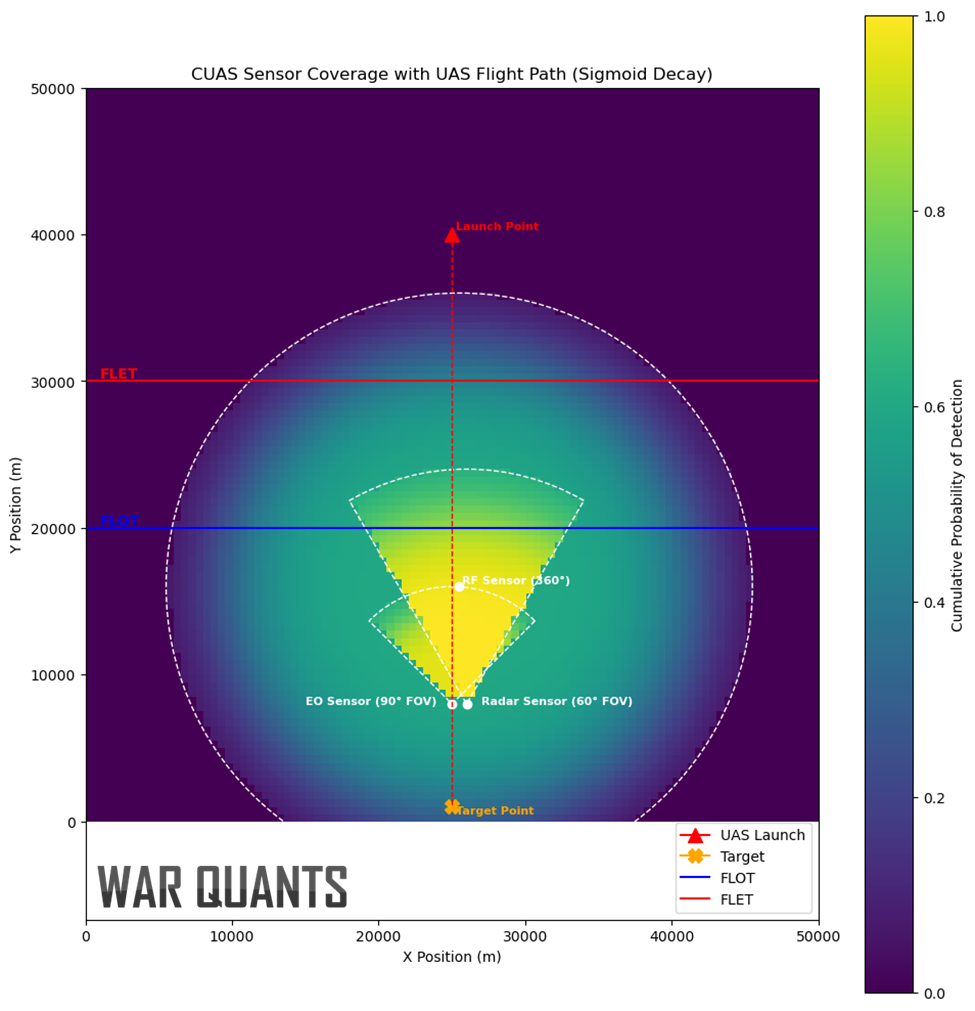

To understand sensor employment, analysts can use simple detection probability grids to test different sensor configurations and capability requirements. Detection probability grids split the battlefield into cells and estimate the detection probability in each cell based on sensor availability and laydown. Similar to a fire plan sketch, they allow commanders to array their sensors to best mitigate the sUAS threat. Consider the following scenario: two adversaries face off along a relatively static front line defined by a forward line of own troops (FLOT) and a forward line of enemy troops (FLET), with a no-man’s land in between. The adversary force launches an sUAS from behind the FLET to target critical infrastructure behind the FLOT. In this scenario, the friendly force has a passive RF sensor near the FLOT that detects the sUAS as it passes the FLET. That passive sensor then cues an active radar that tracks the sUAS as it moves over the FLOT towards the target point. Finally, a passive EO sensor identifies the adversary’s sUAS, setting conditions for defeat. The three sensors integrate into the CUAS kill web.
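A detection probability grid of this kind can be sketched with nothing more than a list of sensors and a loop over cells. The example below is a deliberately crude illustration: sensor positions, ranges, and probabilities are hypothetical, each sensor is modeled as a fixed probability inside a circular range and zero outside it, and sensors are assumed to detect independently.

```python
import math

# Hypothetical laydown: (name, east_km, north_km, max_range_km, p_detect_in_range)
SENSORS = [
    ("passive_rf", 5.0, 2.0, 6.0, 0.90),
    ("radar",      5.0, 1.0, 3.0, 0.80),
    ("eo",         5.0, 0.0, 1.5, 0.70),
]


def cell_detection_probability(east_km, north_km, sensors=SENSORS):
    """P(at least one sensor detects a target at this point), assuming independence."""
    p_all_miss = 1.0
    for _name, s_east, s_north, max_range_km, p_detect in sensors:
        if math.hypot(east_km - s_east, north_km - s_north) <= max_range_km:
            p_all_miss *= 1.0 - p_detect
    return 1.0 - p_all_miss


def build_grid(width_km=10, depth_km=8, cell_km=1.0):
    """Grid of cell-center probabilities; first row is the farthest from the defended area."""
    cols = int(width_km / cell_km)
    rows = int(depth_km / cell_km)
    return [
        [cell_detection_probability((c + 0.5) * cell_km, (r + 0.5) * cell_km)
         for c in range(cols)]
        for r in reversed(range(rows))
    ]


for row in build_grid():
    print(" ".join(f"{p:.2f}" for p in row))
```

Moving a sensor in `SENSORS` and re-running the grid is the code equivalent of redrawing the fire plan sketch: gaps appear as cells with low or zero probability, and overlapping coverage shows up as cells approaching 1.0.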

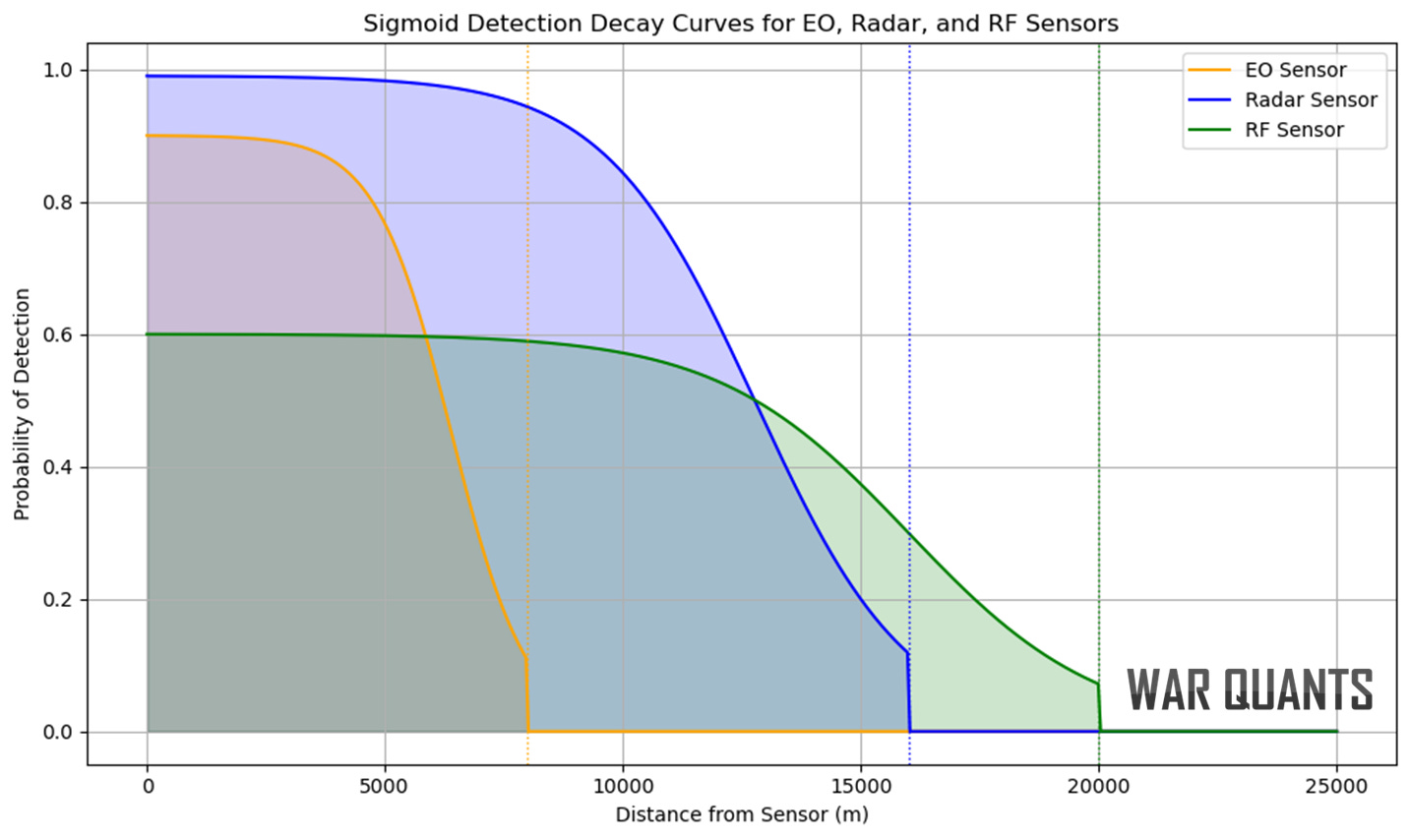

We can compare the sensors using probability of detection curves that show how range to the target affects detection probability. In this case, all curves are plotted from the same origin so they can be compared directly.

For illustrative purposes, this example uses sigmoid detection-decay curves, in which detection probability decreases slowly at first, then drops off quickly and reaches zero at the maximum effective range.
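A curve with this shape can be sketched as a logistic function of normalized range that is forced to zero at the maximum effective range. The parameter names and default values below (`p_max`, `steepness`, `midpoint`) are assumptions chosen to reproduce the described shape, not the values behind the figure.

```python
import math


def detection_probability(range_m: float, max_range_m: float,
                          p_max: float = 0.95, steepness: float = 10.0,
                          midpoint: float = 0.7) -> float:
    """Logistic detection-decay curve, set to zero at and beyond max range.

    midpoint is the fraction of max range at which detection falls to half of
    p_max; steepness controls how sharply the curve drops around that point.
    """
    if range_m < 0 or max_range_m <= 0:
        raise ValueError("range_m must be >= 0 and max_range_m must be > 0")
    if range_m >= max_range_m:
        return 0.0
    x = range_m / max_range_m  # normalized range in [0, 1)
    return p_max / (1.0 + math.exp(steepness * (x - midpoint)))


# Hypothetical sensor with a 1 km maximum effective range.
for r in (0, 250, 500, 700, 900, 1000):
    print(f"{r:>5} m -> {detection_probability(r, 1000.0):.3f}")
```

Because a logistic function never reaches exactly zero on its own, the hard cutoff at maximum range leaves a small step in the curve; a steeper `steepness` or a later `midpoint` shrinks or shifts that step if a smoother tail is needed.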

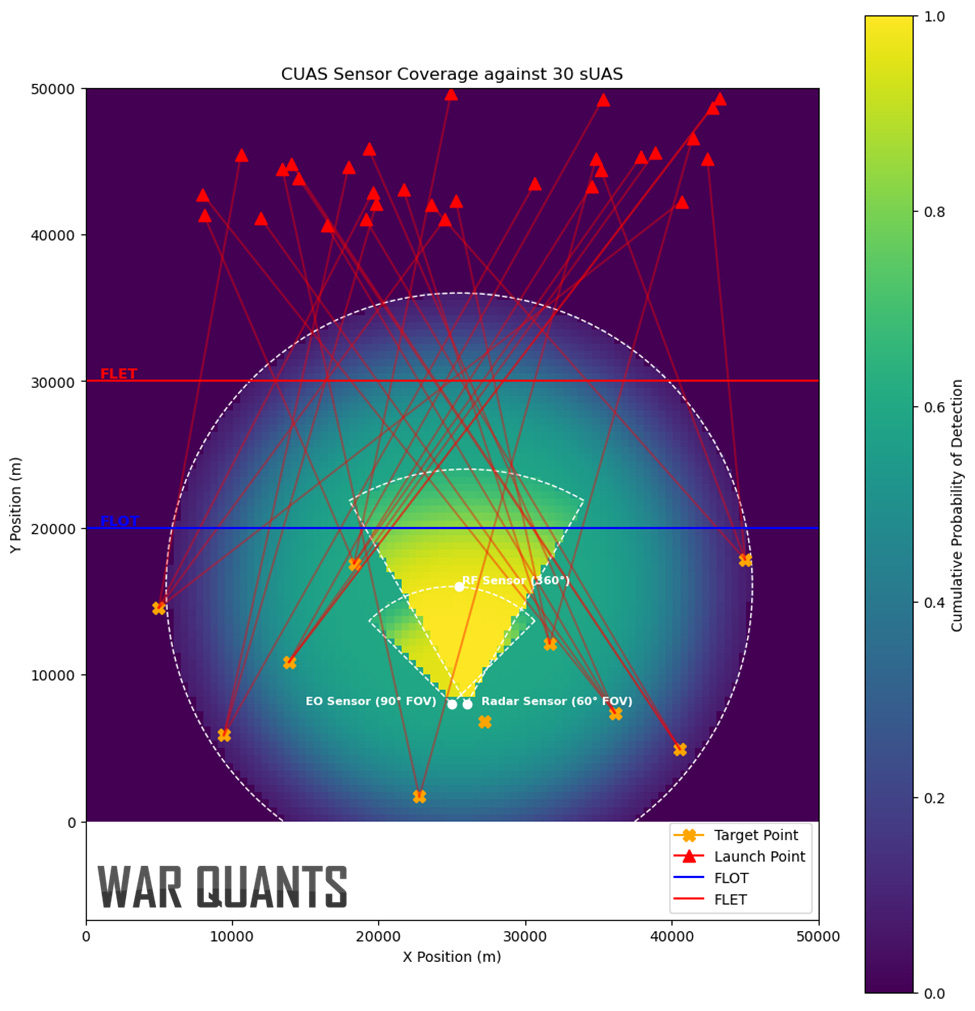

Now imagine this scenario at a larger scale with 30 adversary sUAS.

As the number of potential targets increases, the ability of a limited set of sensors to cover every point generally decreases. In this case, 30 sUAS attack 10 targets. While the CUAS system may detect the adversary’s sUAS moving about the battlespace, tracking and identifying those that do not pass through the radar and EO fields of view will likely be difficult when the single passive RF sensor is the only one covering them.

Toward Smarter Sensing and Faster Decisions

Modern battlefields require more than just better antennas or isolated sensors. They demand an integrated sensing approach that combines various technologies into a single, responsive system. No individual sensor can fully defend against the growing variety of small drones. However, combining sensors through intelligent fusion and simulation-informed planning can close the gaps. As drones become faster, cheaper, and more autonomous, the performance of the sensor network will play a central role in determining outcomes. Smarter sensor placement leads to quicker decisions, and every second counts.

The views and opinions expressed on War Quants are those of the authors and do not necessarily reflect the official policy or position of the United States Government, the Department of Defense, or any other agency or organization.
